Chamber 🏰 of Tech Secrets #16

Navigating Complexity in Systems Architecture

The Chamber 🏰 of Tech Secrets has been opened. It was a busy weekend here as I had my aforementioned wedding and am now officially a married man. Fear not. Even on wedding weekend, the Chamber of Tech Secrets will be reliably delivered to your inbox every Monday for the consistent and low price of $0. Thank you for reading! 🙏

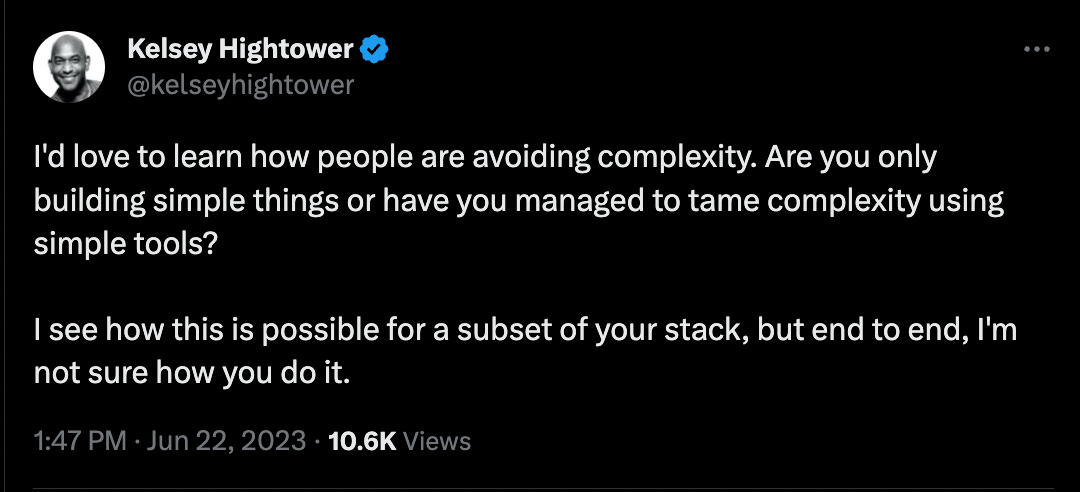

Is Complexity Avoidable?

Complexity is a frequent topic of discussion in systems architecture and delivery. It can arise in many ways, and there are just as many ways to manage and mitigate it.

Complexity in Computer Science

What is complexity? The notion of complexity in computer science originated in computational complexity theory, which is concerned with the resources needed for computation, such as time and space.

Time complexity describes how the running time of an algorithm grows relative to the size of its input. It is usually expressed using “Big O” notation, which gives an asymptotic upper bound on that growth and is most often used to describe the worst-case scenario.
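To make the idea concrete, here is a minimal sketch (a standard textbook example, not something from the original post) of two ways to check a list for duplicates. Each function also counts how many comparisons or steps it performs, so the O(n²) versus O(n) difference shows up as numbers rather than notation. The function names are illustrative.

```python
def has_duplicates_quadratic(items):
    """Compare every pair of items: O(n^2) time, O(1) extra space."""
    comparisons = 0
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            comparisons += 1
            if items[i] == items[j]:
                return True, comparisons
    return False, comparisons


def has_duplicates_linear(items):
    """Remember values already seen in a set: O(n) average time, O(n) extra space."""
    seen = set()
    steps = 0
    for item in items:
        steps += 1
        if item in seen:
            return True, steps
        seen.add(item)
    return False, steps


# No duplicates is the worst case for both functions: every item must be examined.
data = list(range(1000))
print(has_duplicates_quadratic(data))  # (False, 499500)
print(has_duplicates_linear(data))     # (False, 1000)
```

Growing the input 10x grows the quadratic count roughly 100x but the linear count only 10x. The faster version pays for its speed with extra memory, which leads into space complexity.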

Space complexity refers to the amount of memory an algorithm uses in relation to the input size. This helps in understanding the scalability of an algorithm.
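A small illustration, assuming nothing beyond the standard library: both functions below compute the same sum of squares, but the first materializes every intermediate value in a list (O(n) extra memory), while the second keeps only a running total (O(1) extra memory). The names are hypothetical.

```python
def sum_of_squares_list(n):
    # Builds a list holding all n squares before summing: O(n) extra space.
    squares = [i * i for i in range(n)]
    return sum(squares)


def sum_of_squares_streaming(n):
    # Handles one value at a time and keeps a single accumulator: O(1) extra space.
    total = 0
    for i in range(n):
        total += i * i
    return total


print(sum_of_squares_list(10), sum_of_squares_streaming(10))  # 285 285
```

For small inputs the difference is irrelevant. As n grows, only the list version's memory footprint grows with it.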

Transposing these concepts into systems architecture, complexity can be thought of as the mental time and space required to understand, reason about, and manage a system.

Some imperatives

First, let's look at how complexity shows up in systems and understand its nature, so that we can explore how to minimize it in our systems architectures.

No solution has no complexity: Any problem worth solving carries with it some degree of complexity that cannot be destroyed or eliminated. Except this one. We often talk about solving a problem as simply as possible, but once we start writing code we are on the road towards some degree of complexity.

Complexity can shift: Complexity can be shifted elsewhere (a cloud provider, a SaaS solution, a platform team), but it leaves a residual in the form of a new dependency, whether technical or human. Complexity can also be hidden in abstractions. Abstractions are a great tool for reasoning about a sophisticated system, but they still shift complexity rather than remove it. When those abstractions change or break, the complexity re-emerges.
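Here is a minimal sketch of an abstraction shifting complexity instead of removing it. A hypothetical `with_retries` helper hides transient failures from its callers. While it works, callers never think about failure. When every retry fails, the error (and the complexity) surfaces again. `TransientError` and `with_retries` are illustrative names, not a specific library's API.

```python
import time


class TransientError(Exception):
    """Hypothetical error raised by a flaky downstream dependency."""


def with_retries(func, attempts=3, delay_seconds=0.0):
    """Call func, retrying on TransientError up to `attempts` times.

    Callers no longer see individual failures, but the complexity has only
    moved: if every attempt fails, the last error re-emerges to the caller.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return func()
        except TransientError as err:
            last_error = err
            if attempt < attempts - 1:
                time.sleep(delay_seconds)  # back off before the next try
    raise last_error


def always_down():
    raise TransientError("dependency is down")


try:
    with_retries(always_down)
except TransientError as err:
    print("complexity re-emerges:", err)  # complexity re-emerges: dependency is down
```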

Sometimes you have to build “not simple” things: Complexity is not as inherently bad as we sometimes make it out to be. It can be a sign of a significant problem that needs solving.

Complexity is multi-origin: Complexity can surface from many places, such as software components, libraries, external dependencies, APIs built by other teams, identity or secrets management, persistence technologies, distributed computing, infrastructure, platforms, abstractions, monitoring and metrics collection, or (very often) humans. It can also be the result of the Second-System Effect, Fred Brooks's observation that small, elegant, and successful systems often have over-engineered successors. The second iteration of a system is often bloated and loaded with features.

Complexity is composite: A mono repo in a single language with minimal dependencies is about as simple as software gets. Layer in more programming languages, services, databases, authentication mechanisms, or developers and you start to get complexity. Complexity is composite: it results from many different components that have to interact with each other seamlessly, handle complex or cascading failure scenarios, or distribute a problem across many discrete locations.
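One rough way to see why complexity compounds: with n components, there are n × (n − 1) / 2 possible pairs that could interact (and fail) together. The sketch below counts those pairs. This is an illustrative proxy and an upper bound (not every pair actually talks to each other in a real system), and the component names are hypothetical.

```python
from itertools import combinations


def potential_interactions(components):
    """Count distinct component pairs that could interact: n * (n - 1) / 2."""
    return len(list(combinations(components, 2)))


simple = ["app", "database"]
layered = [
    "web app", "mobile app", "orders service", "menu service",
    "identity provider", "secrets manager", "database", "cache",
    "message queue", "monitoring",
]

print(potential_interactions(simple))   # 1
print(potential_interactions(layered))  # 45
```

Going from 2 components to 10 multiplies the number of components by 5 but the number of potential interactions by 45.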

Navigating Complexity

How might you think about navigating complexity? Here are a few ideas from my own experience.

Complexity can be moderated by tools and techniques that help manage it: For instance, breaking a complex task down into simpler, manageable parts can make it easier to handle. This was one of the positive byproducts, and likely one of the motivators, of microservices architectures. Microservices done well create clear bounded contexts, each staffed by a capable team that is able to fully understand its context.

Avoid the Undisciplined Pursuit of More: All the new technologies, languages, frameworks, platforms, etc. are tempting. They are fun to explore. Be careful to not get sucked into the trap of always adding more and more components to your architecture, whether in a single system or across an enterprise architecture. Ask yourself “What do we need to make this work?” and just do that.

Build in a way your team understands and that you anticipate others will understand in the future. Simple code and minimum viable documentation can go a long way toward making it possible to reason about or operate a system. For a hot and controversial take, I think “syntactic sugar” is cool but also a net negative. Consider the following Python code from this tutorial:

contexts = [x['metadata']['text'] for x in res['matches']]

I don’t use Python very often and I wasn’t sure what this code was doing. It kind of makes my brain hurt when I look at it. It is essentially equivalent to this slightly more verbose code using a for-loop:

contexts = []
for x in res['matches']:
    contexts.append(x['metadata']['text'])

The second block is three lines instead of one, which is a slight downside, but I understand it immediately.
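To confirm the two forms really do the same thing, here is a self-contained sketch. The tutorial's actual `res` object isn't shown, so the one below is a hypothetical stand-in shaped to match the snippet: a dict with a `matches` list whose entries carry `metadata` with a `text` field.

```python
# Hypothetical response object shaped like the one the snippet iterates over.
res = {
    "matches": [
        {"id": "a", "metadata": {"text": "first passage"}},
        {"id": "b", "metadata": {"text": "second passage"}},
    ]
}

# List comprehension version (the "syntactic sugar").
contexts_comprehension = [x['metadata']['text'] for x in res['matches']]

# Explicit for-loop version.
contexts_loop = []
for x in res['matches']:
    contexts_loop.append(x['metadata']['text'])

print(contexts_comprehension == contexts_loop)  # True
print(contexts_loop)  # ['first passage', 'second passage']
```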

Invest in defining clear, well-sized domains: It's crucial to delineate clear working boundaries for your teams, particularly in an enterprise setting where several teams might overlap in their areas of focus. By establishing these boundaries and interfaces from the outset, you prevent many of the human-induced complexities that frequently arise in enterprise software development. This principle is not limited to large corporations; it applies equally to startups and small businesses. Taking on tasks outside your core mission leads to an unnecessary accumulation of code, documentation, and potentially extraneous infrastructure, all of which are liabilities. Adopt an essentialist approach to your problem space to minimize complexity, both now and in the future.
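In code, a domain boundary often comes down to a narrow interface that one team owns and another team depends on. The sketch below uses Python's `typing.Protocol` to express such a boundary. The menu and ordering domains, `MenuCatalog`, `InMemoryMenuCatalog`, and the prices are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class MenuItem:
    item_id: str
    name: str
    price_cents: int


class MenuCatalog(Protocol):
    """The only surface the ordering domain is allowed to depend on."""

    def get_item(self, item_id: str) -> MenuItem: ...


class InMemoryMenuCatalog:
    """One possible implementation, owned by a hypothetical menu team."""

    def __init__(self, items: list[MenuItem]) -> None:
        self._items = {item.item_id: item for item in items}

    def get_item(self, item_id: str) -> MenuItem:
        return self._items[item_id]


def order_total_cents(catalog: MenuCatalog, item_ids: list[str]) -> int:
    """Ordering-domain logic that knows nothing about how the menu is stored."""
    return sum(catalog.get_item(item_id).price_cents for item_id in item_ids)


catalog = InMemoryMenuCatalog([
    MenuItem("sandwich", "Sandwich", 549),
    MenuItem("fries", "Fries", 249),
])
print(order_total_cents(catalog, ["sandwich", "fries", "fries"]))  # 1047
```

The menu team can swap the in-memory catalog for a database or an API without the ordering team changing any code, as long as `get_item` keeps its contract.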

Thoroughly decompose the problem but don’t overly decompose the solution: Decomposing a problem into small pieces that are easy to work on and can each be completed in a day is the ideal. In his article “The Magical Number Seven, Plus or Minus Two,” cognitive psychologist George Miller states that “the span of absolute judgment and the span of immediate memory impose severe limitations on the amount of information that we are able to receive, process, and remember. By organizing the stimulus input simultaneously into several dimensions and successively into a sequence of chunks, we manage to break (or at least stretch) this informational bottleneck.” Decompose the problem, but don’t go microservices crazy in the process.

Achieve rapid feedback cycles on what is built: Do agile for real. Invest in pipelines and testing that you trust and that let you work on small, simple chunks of work that are rapidly deployed to production and deliver rapid feedback to the team. Big releases are inherently complex, and even more so when the system itself is large and complex.

Think about complexity as a tradeoff, and make sure the value you obtain from introducing it is balanced against the cost it imposes on your system and / or team. Move toward more complex solutions (like Kubernetes) when the problem is real and well understood, rather than proactively. We ran our entire Chick-fil-A One mobile app backend primarily on AWS Elastic Beanstalk and DynamoDB for years. It was simple and it worked. We grew into Kubernetes later, as our needs for scale (or similar) demanded it.

A brief case study

One architecture that I am proud of is Edge Computing at Chick-fil-A. Looking at the problem from the outside, many assumed that our solution was a bit cliché and definitely overly complex for a fast food restaurant chain… and understandably so. An architecture with ~2,800 Kubernetes clusters in remote locations with unreliable network connections and no IT staff sounds like it could be a disaster. Kubernetes is under constant criticism for its complexity in the cloud, so running it at the edge sounds all the more complex.

Our solution did require some complex problem solving. We had a goal to be able to run operationally critical workloads when our WAN connections were unavailable. To do that we wanted to drive single points of failure out of our restaurant architecture, which meant we would need multiple hardware nodes. To run multiple nodes we needed something to perform as a scheduler for our workloads. That is exactly what we used Kubernetes (k3s) for.
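Why do multiple nodes need a scheduler? Someone has to decide where each workload runs so that losing one node doesn't take a workload down. The toy sketch below places each workload's replicas on distinct, least-loaded nodes and then checks whether every workload survives the loss of any single node. This is not how k3s or Kubernetes schedules pods (the real scheduler filters and scores nodes, and in Kubernetes you would express this intent with replica counts plus pod anti-affinity or topology spread constraints). The node and workload names are hypothetical.

```python
def spread_replicas(workloads, nodes):
    """Place each workload's replicas on distinct nodes (toy anti-affinity).

    workloads maps a workload name to its desired replica count.
    Returns a dict mapping each node name to the workloads placed on it.
    """
    placement = {node: [] for node in nodes}
    for name, replicas in workloads.items():
        if replicas > len(nodes):
            raise ValueError(f"{name} wants {replicas} replicas but only {len(nodes)} nodes exist")
        # Prefer the least-loaded nodes so work is spread evenly.
        least_loaded = sorted(nodes, key=lambda n: len(placement[n]))
        for node in least_loaded[:replicas]:
            placement[node].append(name)
    return placement


def survives_node_loss(placement, workloads, failed_node):
    """True if every workload still has at least one replica after one node fails."""
    remaining = {name: 0 for name in workloads}
    for node, names in placement.items():
        if node == failed_node:
            continue
        for name in names:
            remaining[name] += 1
    return all(count > 0 for count in remaining.values())


nodes = ["node-1", "node-2", "node-3"]
workloads = {"point-of-sale": 2, "kitchen-display": 2}
placement = spread_replicas(workloads, nodes)
print(all(survives_node_loss(placement, workloads, n) for n in nodes))  # True
```

A workload with only one replica would fail this check for the node it lands on: that node is a single point of failure for it.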

We used a lot of self-restraint and tried to keep our footprint to this primary purpose. We also know that persistence is often the most difficult element in a distributed system. While we do offer redundant persistence (across our nodes), we elected to offer no SLAs on persistence and to take a “best-effort” approach. We encourage teams to offload their data to the cloud whenever they are online (which is most of the time) and to be capable of rehydrating any state they need should the app die or be killed.
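The pattern described above (local state treated as disposable, offloaded to the cloud when online, and rehydrated after a restart) can be sketched as follows. This is a hypothetical illustration, not Chick-fil-A's implementation. A plain dict stands in for the cloud store, and `BestEffortStore`, `put`, `sync`, and `rehydrate` are invented names.

```python
class BestEffortStore:
    """Hypothetical edge app state: local first, offloaded to the cloud when online.

    Local data may be lost at any time; the cloud copy is the durable one.
    """

    def __init__(self, cloud):
        self.cloud = cloud     # stand-in for a cloud storage client (a dict here)
        self.local = {}        # local state that can disappear with the app
        self.pending = set()   # keys written locally but not yet offloaded

    def put(self, key, value, online):
        self.local[key] = value
        self.pending.add(key)
        if online:
            self.sync()

    def sync(self):
        """Push pending writes to the cloud; call whenever connectivity returns."""
        for key in sorted(self.pending):
            self.cloud[key] = self.local[key]
        self.pending.clear()

    @classmethod
    def rehydrate(cls, cloud):
        """Rebuild local state from the cloud after the app dies or is killed."""
        store = cls(cloud)
        store.local = dict(cloud)
        return store


cloud = {}
store = BestEffortStore(cloud)
store.put("order-1", {"status": "paid"}, online=True)
store.put("order-2", {"status": "new"}, online=False)  # WAN down: kept locally
store.sync()                                            # WAN back: offloaded

restarted = BestEffortStore.rehydrate(cloud)            # local state was lost
print(restarted.local == {"order-1": {"status": "paid"}, "order-2": {"status": "new"}})  # True
```

The "best-effort" tradeoff is visible here: anything written while offline and never synced is lost if the app dies before connectivity returns.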

Are there other potential solutions? Sure. We could run a single node and have no clustering. We could avoid edge compute completely and just use the cloud whenever the internet works. However, these approaches would not have allowed us to meet our actual business goal. We embraced just enough complexity to solve our problem, and I think in systems building, that is the best we can do.

In the last month I ran across this John Gall quote that I thought you'd enjoy.

"A complex system that works is invariably found to have evolved from a simple system that works. The inverse proposition also appears to be true: A complex system designed from scratch never works and cannot be made to work."

--John Gall
